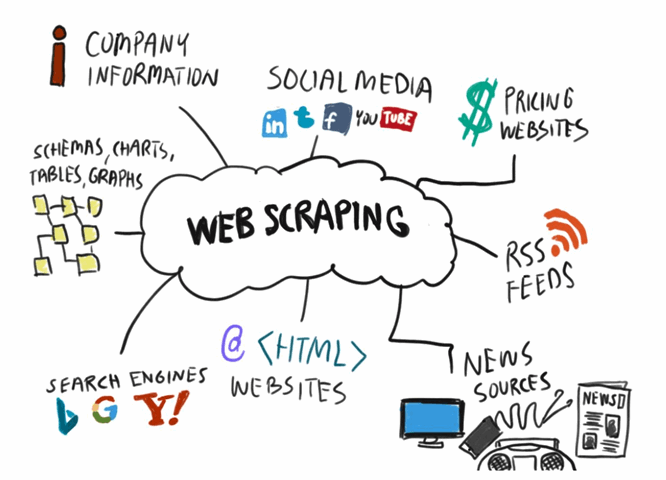

Web scraping and data extraction have become indispensable tools for SEO professionals. By automating the process of gathering data from websites, these techniques allow marketers to analyze trends, track competitors, and identify opportunities for improvement. However, web scraping also raises ethical and legal questions, so it needs to be approached responsibly. This guide explores how web scraping can enhance your SEO efforts and outlines best practices to ensure compliance and success.

What is Web Scraping?

Web scraping is the process of using automated tools or scripts to extract data from websites. This data can include everything from keyword rankings and backlink profiles to competitor pricing and customer reviews. By collecting and analyzing this information, businesses can make data-driven decisions to improve their online visibility and performance.

Benefits of Web Scraping for SEO

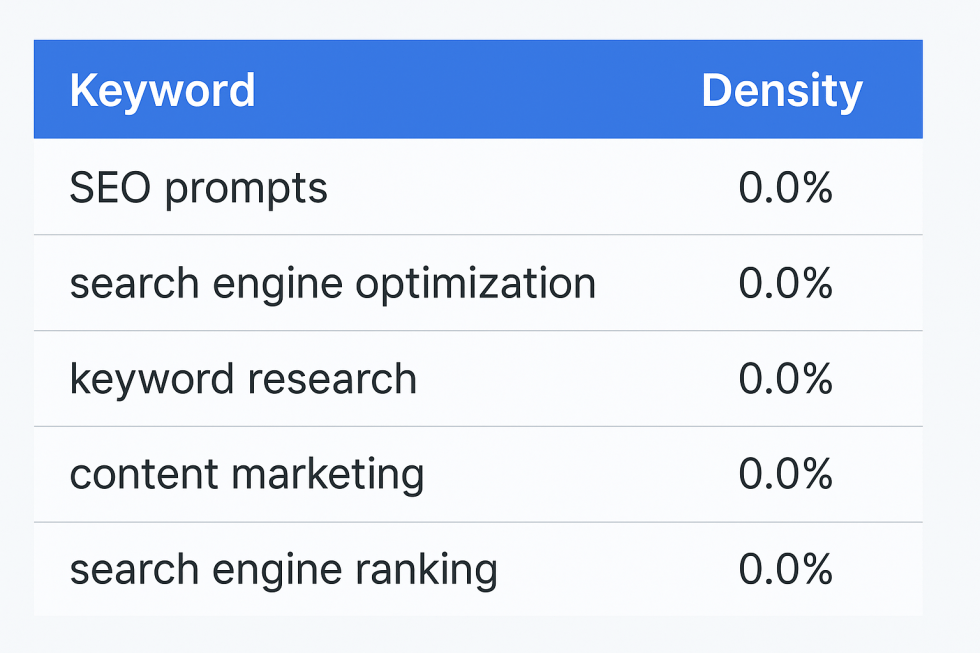

1. Keyword Research

Web scraping tools can gather keyword data from search engine results pages (SERPs), helping you identify high-performing terms and long-tail keywords that resonate with your target audience.

2. Competitor Analysis

Scraping competitor websites can reveal insights into their content strategies, backlink profiles, and ranking keywords, allowing you to refine your own SEO tactics.

3. Content Optimization

By analyzing top-ranking pages, you can extract data on meta tags, headings, and content length to identify patterns and optimize your own content.
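As a rough illustration of this kind of extraction, the sketch below pulls the title, meta description, headings, and an approximate word count out of a page using only Python's standard library. The sample HTML, the `OnPageParser` class, and the `analyze_page` helper are all hypothetical names invented for this example. A real project would fetch the HTML first and might prefer a dedicated parser such as Beautiful Soup.

```python
from html.parser import HTMLParser

# Hypothetical sample page, invented for illustration only.
SAMPLE_HTML = """<html><head><title>Blue Widgets Guide</title>
<meta name="description" content="How to choose blue widgets.">
<style>body { color: red; }</style></head>
<body><h1>Blue Widgets</h1><p>Pick the right widget today.</p>
<h2>Sizes</h2><p>Small and large.</p></body></html>"""


class OnPageParser(HTMLParser):
    """Collects title, meta description, headings, and a rough word count."""

    HEADINGS = {"h1", "h2", "h3"}
    SKIPPED = {"script", "style"}          # content that is not visible text
    TRACKED = HEADINGS | SKIPPED | {"title"}

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta_description = ""
        self.headings = []                 # list of (tag, text) pairs
        self.word_count = 0
        self._current = None               # tracked tag we are inside, if any
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "description":
            self.meta_description = (attr.get("content") or "").strip()
        elif tag in self.TRACKED:
            self._current = tag
            self._buffer = []

    def handle_endtag(self, tag):
        if tag != self._current:
            return
        text = " ".join("".join(self._buffer).split())
        if tag == "title":
            self.title = text
        elif tag in self.HEADINGS:
            self.headings.append((tag, text))
        self._current = None

    def handle_data(self, data):
        if self._current in self.SKIPPED:
            return
        if self._current is not None:
            self._buffer.append(data)
        if self._current != "title":       # the title is not body content
            self.word_count += len(data.split())


def analyze_page(html_text):
    """Return a small dictionary of on-page SEO signals for one HTML document."""
    parser = OnPageParser()
    parser.feed(html_text)
    parser.close()
    return {
        "title": parser.title,
        "meta_description": parser.meta_description,
        "headings": parser.headings,
        "word_count": parser.word_count,
    }


report = analyze_page(SAMPLE_HTML)
print(report)
```

Running the same function over several top-ranking pages gives you comparable rows, which makes patterns in title length, heading structure, and content length easier to spot.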

4. Backlink Opportunities

Web scraping helps identify websites linking to your competitors, giving you potential backlink opportunities to boost your own domain authority.

5. SERP Monitoring

Regularly scraping SERPs allows you to track your rankings, monitor featured snippets, and spot ranking shifts that may signal an algorithm update affecting your position.

Tools for Web Scraping

1. Scrapy

Scrapy is an open-source Python framework for web scraping. Its flexibility and scalability make it a popular choice among SEO professionals.

2. Beautiful Soup

Another Python-based tool, Beautiful Soup simplifies the process of parsing HTML and XML documents, making it easier to extract the data you need.

3. Octoparse

Octoparse is a user-friendly tool that offers a visual interface for building scraping workflows without coding expertise.

4. Google Sheets and APIs

For simpler projects, Google Sheets combined with APIs can be a lightweight and effective solution for extracting and organizing data.

Ethical and Legal Considerations

While web scraping can provide valuable insights, it's crucial to follow ethical guidelines and respect legal boundaries to avoid potential penalties.

Respect Robots.txt

Many websites include a robots.txt file that tells automated clients which parts of the site they may or may not access. Always check and adhere to these directives before scraping.
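Python's standard library includes `urllib.robotparser`, which can answer whether a given user agent may fetch a URL. The sketch below parses an inline robots.txt so that it runs without network access. The rules, the `example-seo-bot` user agent, and the example.com URLs are made up for illustration. In practice you would point the parser at the site's real robots.txt with `set_url()` and `read()`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, invented for illustration only.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

USER_AGENT = "example-seo-bot"  # assumed name for your scraper


def build_parser(robots_txt):
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


robots = build_parser(SAMPLE_ROBOTS_TXT)

blog_allowed = robots.can_fetch(USER_AGENT, "https://example.com/blog/post")
private_allowed = robots.can_fetch(USER_AGENT, "https://example.com/private/report")
delay = robots.crawl_delay(USER_AGENT)  # None if the file sets no Crawl-delay

print(blog_allowed, private_allowed, delay)
```

Checking `can_fetch()` before every request, and honoring any crawl delay the site declares, keeps the scraper within the rules the site owner has published.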

Avoid Overloading Servers

Scraping too frequently or sending excessive requests can overwhelm a server, potentially causing downtime. Use rate limits and avoid disruptive behavior.

Seek Permission

Whenever possible, reach out to website owners to request permission for data scraping. This can help establish trust and prevent legal issues.

Protect User Data

Ensure that your scraping activities do not compromise user privacy or violate data protection regulations such as GDPR or CCPA.

Challenges of Web Scraping for SEO

1. Dynamic Content

Many modern websites use JavaScript to load content dynamically, making it more challenging to extract data with traditional scraping tools.

2. CAPTCHAs and Bot Detection

Websites often implement CAPTCHAs or other anti-bot measures to prevent automated scraping, and working around them requires more advanced techniques.

3. Data Cleaning

Scraped data often contains inconsistencies or unnecessary information, requiring additional effort to clean and format it for analysis.
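A small cleaning pass often covers the most common problems: leftover tags, HTML entities, stray whitespace, and duplicates. The following is a minimal sketch using only the standard library. The function names and sample values are hypothetical, and the regular expression is a crude tag stripper that suits short snippets rather than full documents.

```python
import html
import re

# Hypothetical scraped values, invented for illustration only.
RAW_KEYWORDS = [
    "  Best&nbsp;Running <b>Shoes</b> ",
    "best running shoes",
    "",
    "Trail &amp; Road\n",
]


def clean_text(raw):
    """Strip tags, decode HTML entities, and collapse runs of whitespace."""
    without_tags = re.sub(r"<[^>]+>", " ", raw)
    # str.split() with no arguments also splits on non-breaking spaces.
    return " ".join(html.unescape(without_tags).split())


def dedupe(values):
    """Drop empty strings and case-insensitive duplicates, keeping first seen."""
    seen = set()
    result = []
    for value in values:
        key = value.casefold()
        if key and key not in seen:
            seen.add(key)
            result.append(value)
    return result


cleaned = dedupe(clean_text(value) for value in RAW_KEYWORDS)
print(cleaned)
```

Tags are removed before entities are decoded so that escaped text such as `&lt;b&gt;` is not mistaken for markup.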

4. Legal Risks

Non-compliance with website terms of service or data protection laws can result in legal action, fines, or other penalties.

Best Practices for Web Scraping

Use Reliable Tools

Choose tools that are well-supported and capable of handling complex scraping tasks while respecting website rules.

Set Rate Limits

Avoid sending too many requests in a short period. Implement rate limits to prevent overloading servers and triggering anti-bot measures.
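One simple way to enforce a rate limit is to guarantee a minimum interval between consecutive requests. The sketch below is an illustration rather than a complete solution: `Throttle` and `fetch_all` are hypothetical names, and `fetch` stands in for whatever function actually downloads a page in your project. The clock and sleep functions are injectable so the logic can be checked without real waiting.

```python
import time


class Throttle:
    """Blocks so that calls to wait() are at least min_interval seconds apart."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


def fetch_all(urls, fetch, throttle):
    """Call fetch(url) for each URL, pausing between requests as needed."""
    results = []
    for url in urls:
        throttle.wait()
        results.append(fetch(url))
    return results
```

For example, `fetch_all(urls, my_fetch, Throttle(2.0))` would issue at most one request every two seconds, where `my_fetch` is your own download function. If the site declares a crawl delay in robots.txt, that value is a sensible lower bound for `min_interval`.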

Focus on Publicly Available Data

Scrape only publicly accessible information and avoid extracting sensitive or proprietary content.

Monitor Changes

Websites frequently update their structures, which can break your scraping workflows. Regularly monitor and adapt your scripts to accommodate changes.
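One lightweight way to notice a layout change is to check every scraped record for fields that should always be present, and to raise a flag when too many come back empty. The sketch below assumes records are plain dictionaries. The field names, the 50% threshold, and the function names are all hypothetical choices for illustration.

```python
# Hypothetical fields that every scraped page is expected to yield.
REQUIRED_FIELDS = ("title", "meta_description", "h1")


def missing_fields(record, required=REQUIRED_FIELDS):
    """Return the required fields that are absent or blank in one record."""
    return [name for name in required if not str(record.get(name) or "").strip()]


def failure_rate(records, required=REQUIRED_FIELDS):
    """Fraction of records with at least one missing required field."""
    if not records:
        return 0.0
    broken = sum(1 for record in records if missing_fields(record, required))
    return broken / len(records)


def looks_broken(records, threshold=0.5, required=REQUIRED_FIELDS):
    """True when enough records are incomplete to suggest a changed layout."""
    return failure_rate(records, required) >= threshold


# Hypothetical scrape results, invented for illustration only.
sample_records = [
    {"title": "Blue Widgets", "meta_description": "All about widgets.", "h1": "Blue Widgets"},
    {"title": "", "meta_description": None, "h1": "Red Widgets"},
]

print(missing_fields(sample_records[1]), looks_broken(sample_records))
```

Running a check like this after each scrape turns a silent failure, such as a renamed CSS class that leaves every title empty, into an alert you can act on.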

Alternatives to Web Scraping for SEO

For those who wish to avoid the complexities and risks of web scraping, consider these alternatives:

Use APIs

Many platforms, such as Google, Ahrefs, and SEMrush, offer APIs that provide structured access to data without violating terms of service.

Manual Research

While time-consuming, manual research can be effective for smaller-scale projects or when scraping is not feasible.

Third-Party Tools

Leverage SEO tools that aggregate and analyze data for you, eliminating the need for custom scraping workflows.

Conclusion

Web scraping and data extraction are powerful techniques for enhancing your SEO strategy. From competitor analysis to keyword research, these methods provide insights that can drive better decision-making and improve search engine rankings. However, it's essential to approach web scraping responsibly, respecting ethical and legal considerations. By using the right tools, following best practices, and exploring alternatives when necessary, you can harness the power of data to achieve your SEO goals.
